The FTC’s punishment of a facial recognition app could be the future of AI regulation

Big Tech could be forced to delete AI algorithms created from ill-gotten data.

Related Articles

-

-

Big Tech power rankings: Where the 5 giants stand to start 2024

-

The government can read your push notifications

-

Why you usually can’t buy weed with a credit card… for now

-

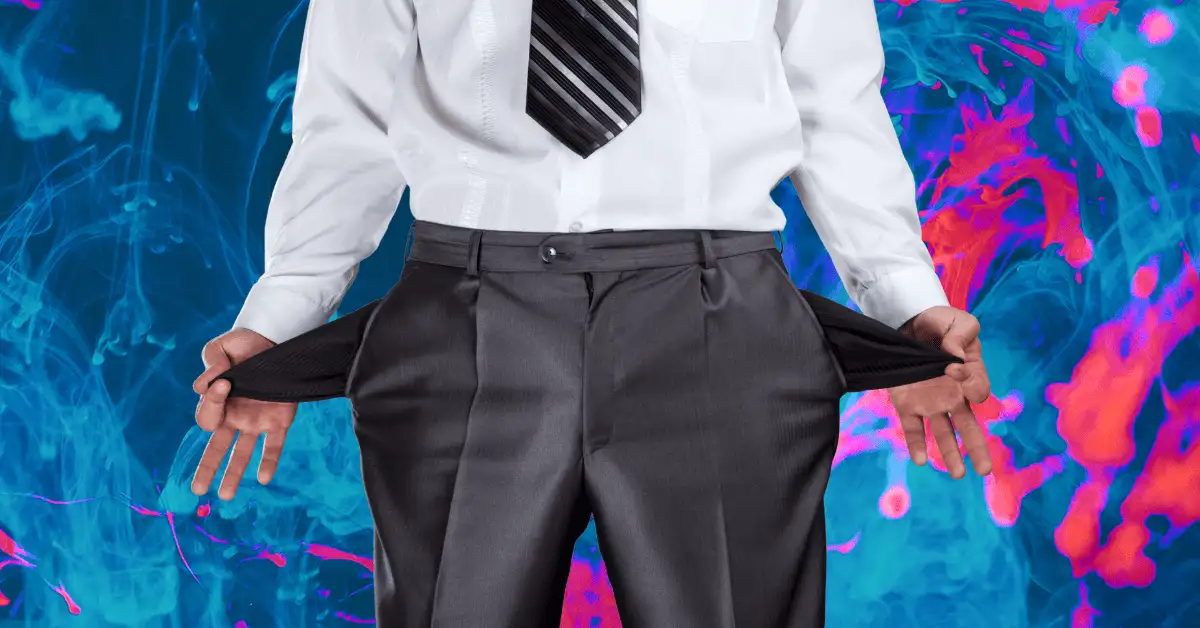

Where there’s smoke, there’s an autonomous vehicle blocking a fire

-

Meta vs. Canada is a long pattern of dismantling news

-

Is the ‘Big One’ about to drop on Amazon?

-

Enshittification just keeps happening

-

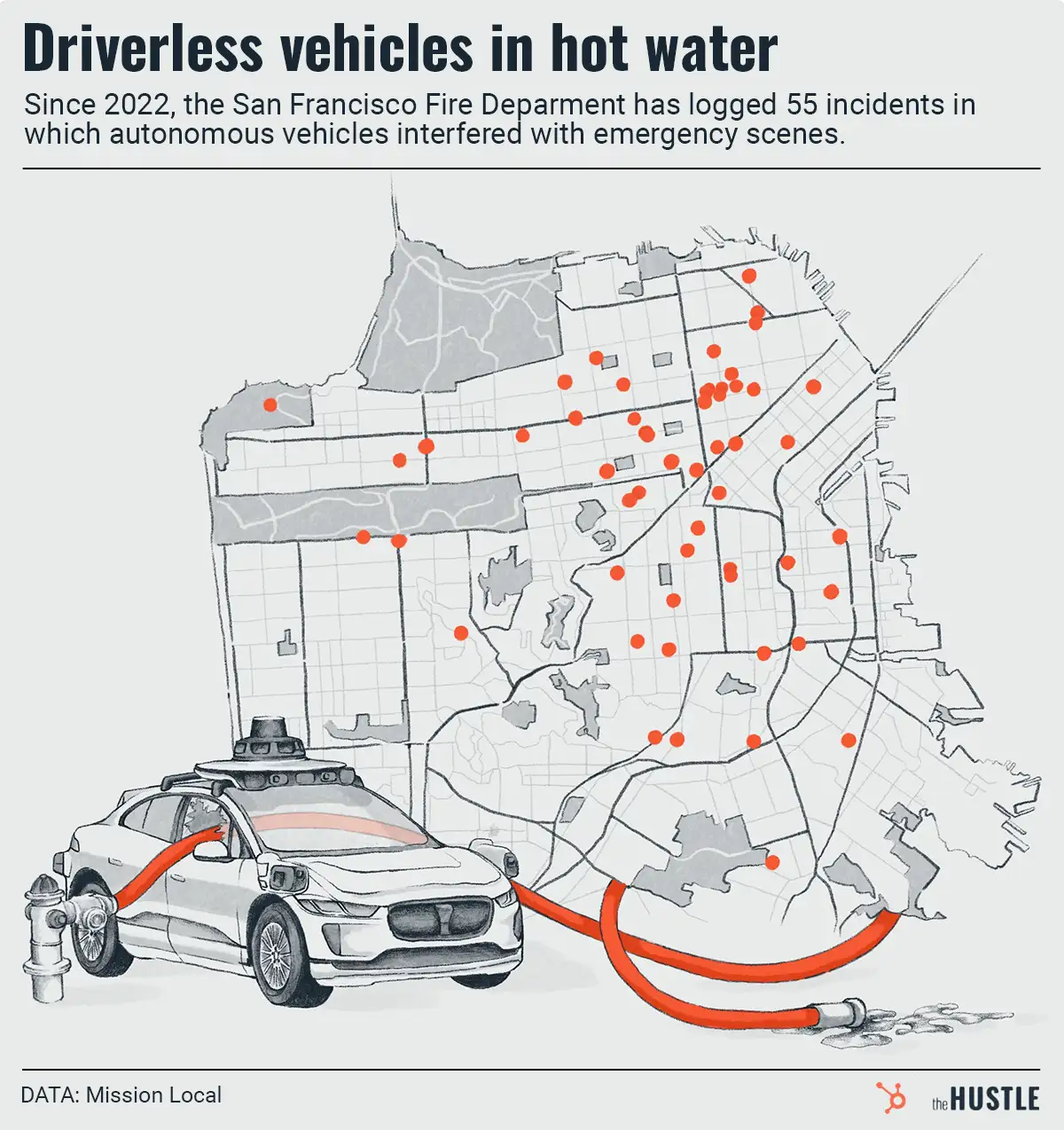

After years of building pricey playgrounds, Big Tech recalibrates

-

TikTok’s new plan to avoid getting banned in the US