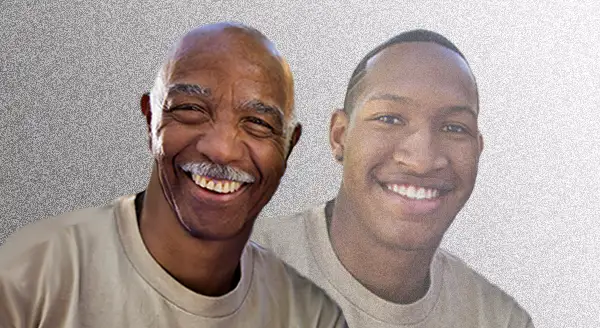

Is the search-by-face function on photo apps the most useful tool of the last 1k years? Probably not. But it does show that computers are great at some things we aren’t.

Like picking out individual faces among many, which roughly six decades of technological development have made relatively easy.

But a new study from Mozilla fellow Deborah Raji and Genevieve Fried, a congressional advisor on algorithmic accountability, shows these developments have come at a cost:

Researchers have ‘gradually abandoned asking for people’s consent’

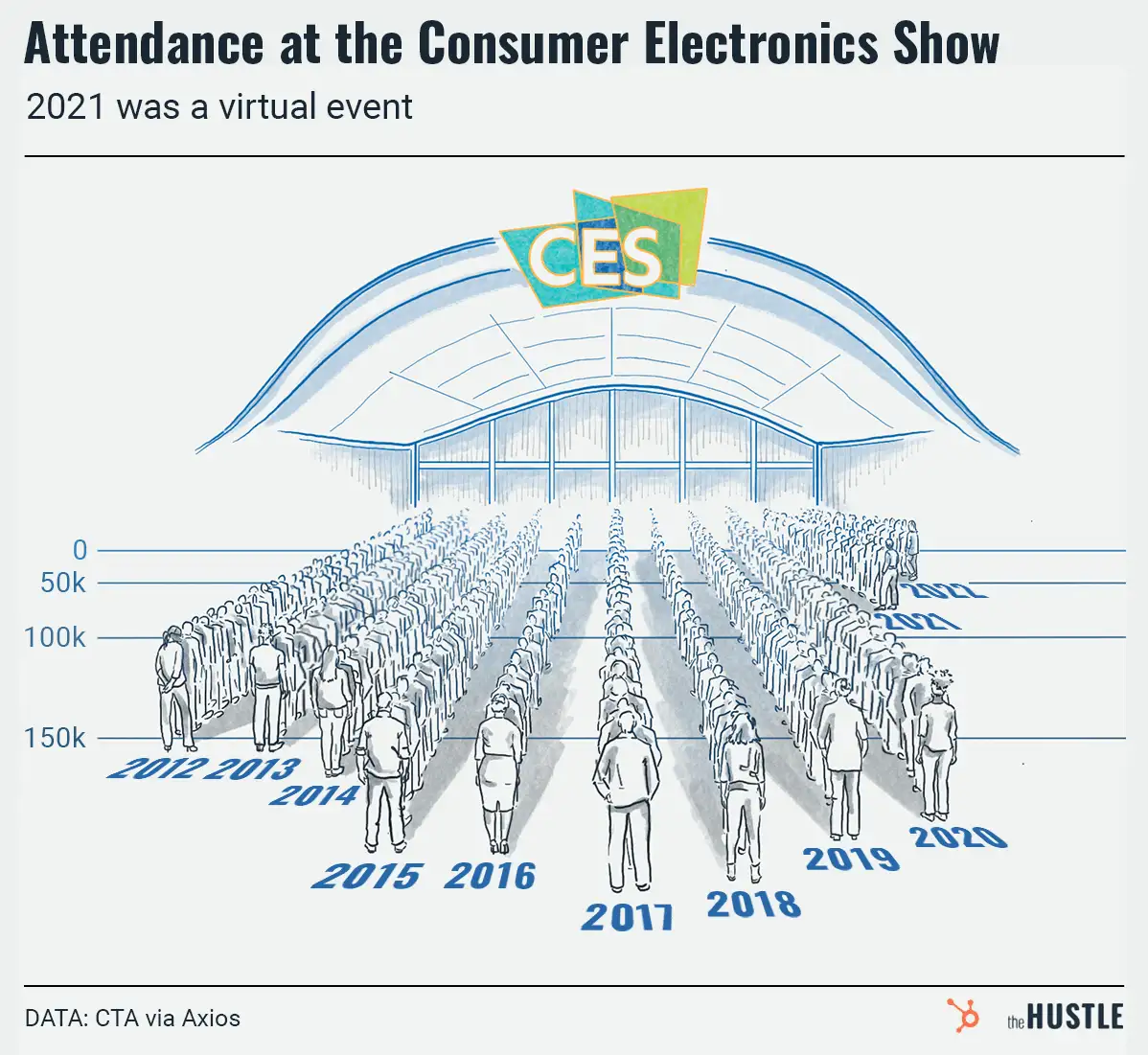

Raji and Fried examined 130 facial recognition datasets spanning 4 eras and found that consent has gradually gone out the window:

- 1960s-1990s: Manual data gathering involved measuring distances between facial features in consented photographs.

- 1990s-2007: The DOD spent $6.5m building the first large face dataset, capturing 14k consented images and sparking academic and commercial interest.

- 2007-2014: Researchers began scraping images from the web without consent.

- 2014-present: Facebook used 4m images from 4k profiles to train its DeepFace model, helping make deep learning on web-sourced images the industry standard.

In the first era, consented photo shoots made up 100% of facial recognition data sources. Today, they make up just 8.7%, with web search comprising the rest.

Which is great for the technology, but bad for consent

Training models on millions of images improves the tech through sheer scale, but it also raises serious concerns about widespread bias and privacy violations.

Studies have shown that white males are falsely matched with mug shots less often than other groups, and a test of Amazon’s Rekognition software showed it misidentified 28 NFL players as criminals.

Microsoft and Stanford released facial datasets built without consent…

… which startups and a Chinese military academy obtained before the datasets were taken down.

Facial recognition is moving a bit too fast for some: Boston, San Francisco, Oakland, and Portland have outlawed “city use of the surveillance technology,” per CNN.

This seems to be a reasonable measure; at a minimum, it’ll give people less of a reason to put on (and this is actually a thing) anti-facial-recognition makeup: