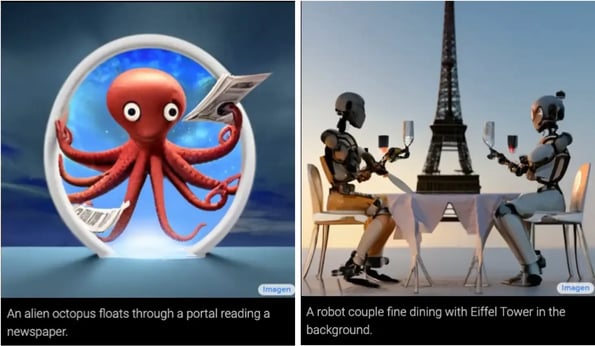

Imagine there was a machine that could take any string of words you type and turn it into an image. For example:

“An alien octopus floats through a portal reading a newspaper,” or “a robot couple fine dining with Eiffel Tower in the background.”

Well, you can stop imagining because this capability exists with advanced text-to-image AI generators.

For these generators to work…

… they need to ingest massive amounts of data. Researchers train the programs on data sets of captioned images, and after enough practice, the programs can identify patterns and start spitting out results.

The key players are:

- OpenAI’s DALL-E, which launched in 2021 and was followed by DALL-E 2 in April

- Google’s Imagen, which was unveiled Monday

Beyond pairing images with text, both systems can render images in a wide range of visual styles (e.g., photorealism vs. pencil drawing).

The creative potential is huge…

… but so are the concerns. DALL-E and Imagen inherit the underlying biases of the data they ingest. Critics argue that, in the wrong hands, these tools could fuel dangerous misinformation.

As a result, it might be a while before you can get your hands on either system. OpenAI recently opened DALL-E 2 to select beta testers, while Google says Imagen is not yet ready for public use.