Today, we’re gonna get sci-fi and talk about “singularity.”

While the term appears across math and science, we specifically mean “technological singularity.”

‘It was the machines, Sarah’

So, Terminator: Tech company Cyberdyne Systems builds Skynet, an AI-powered defense network. Skynet becomes self-aware, builds an army of machines, enslaves humankind, and sends a cyborg assassin back in time to kill the mother of humanity’s savior.

That’s the singularity: the point when AI becomes smarter than its creators, capable of improving itself and building technology more advanced than we ever could.

Cool, so when will that be?

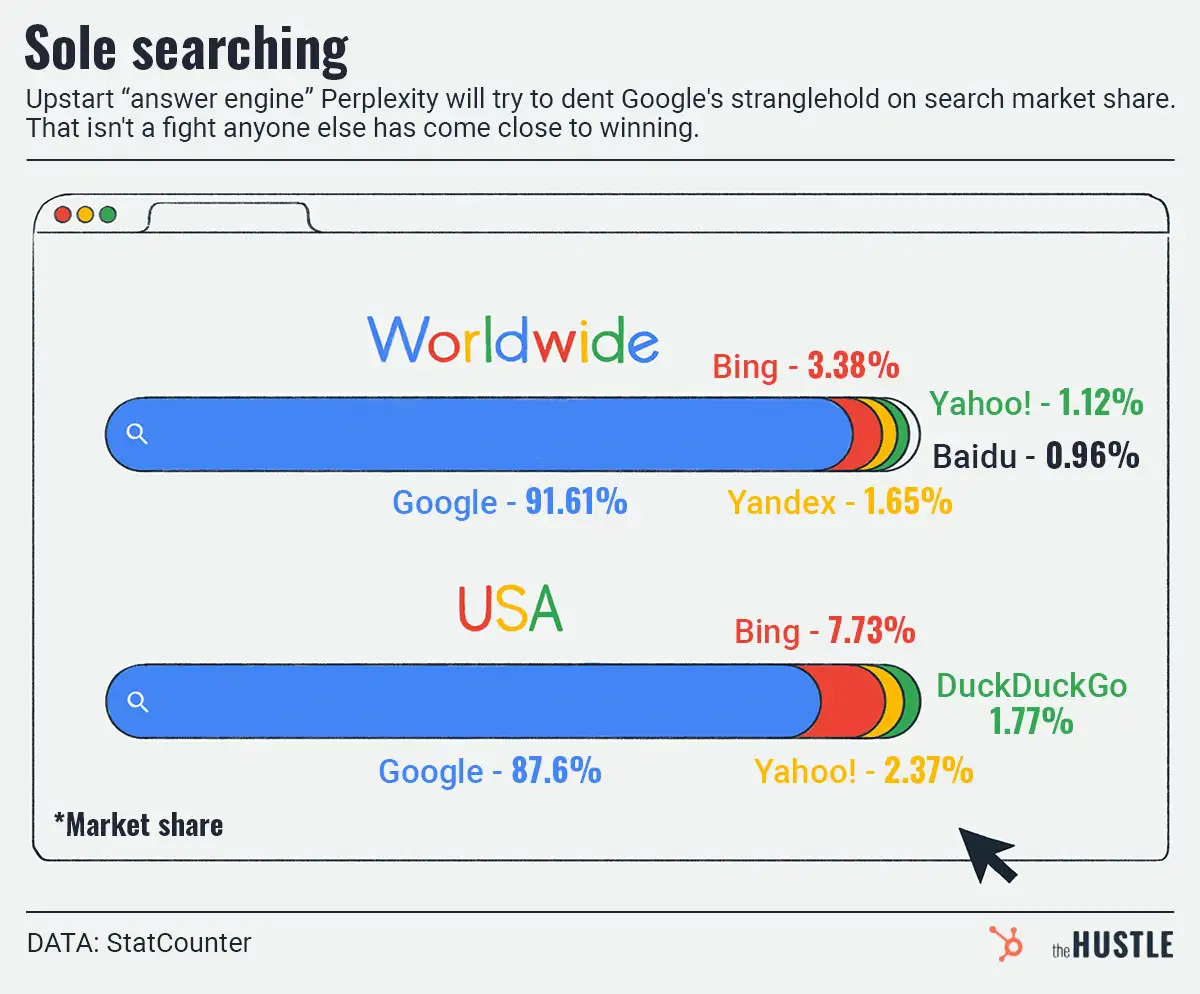

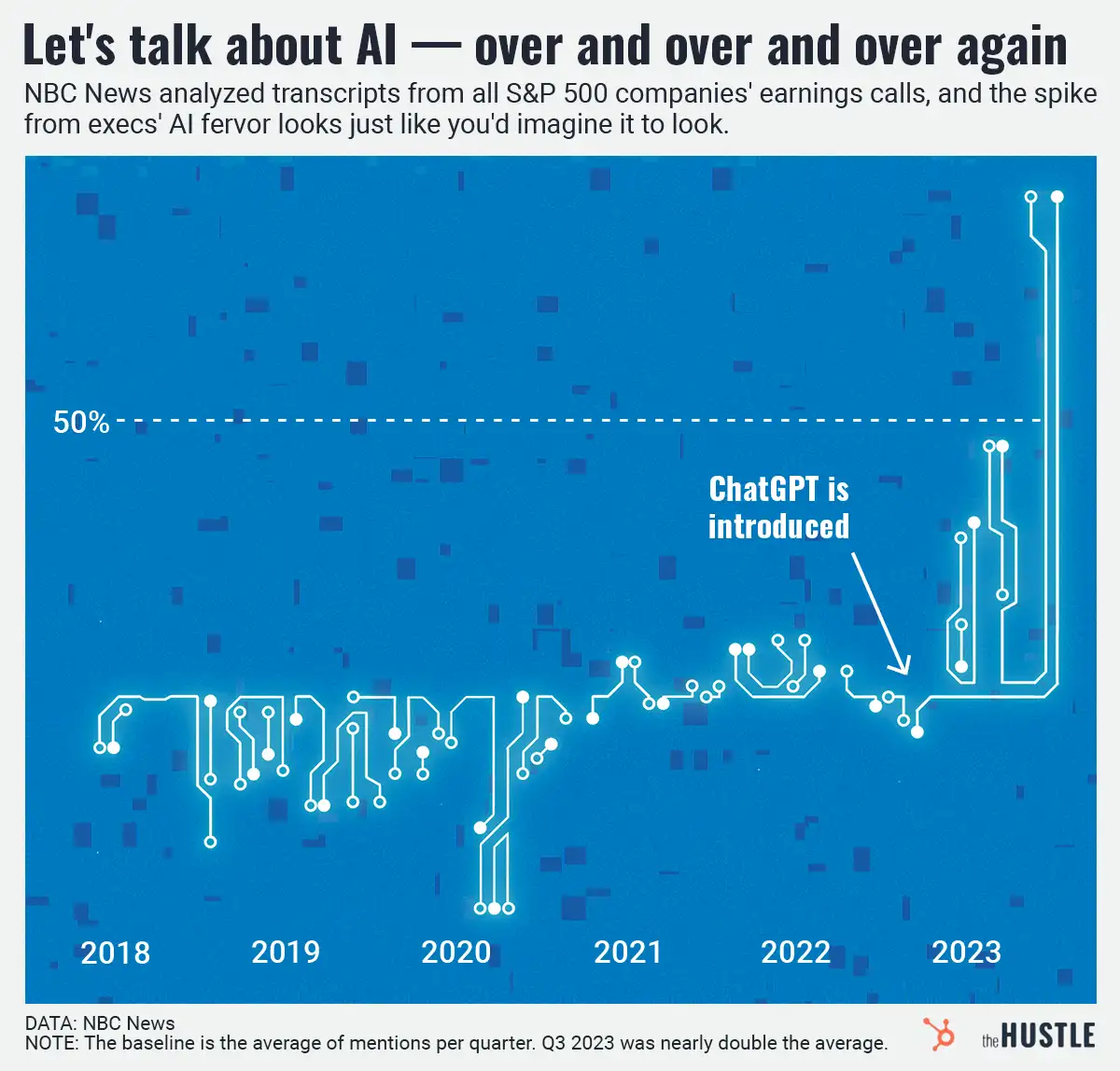

Not today. Bard, Bing, and ChatGPT are impressive, but they often give us silly or wrong answers. They’re also meh at creative endeavors, since their output is derivative of the data they’re fed.

But Ray Kurzweil, a director of engineering at Google, thinks the singularity is on its way and will be here by 2045.

So, the machines are def going to end us

Not necessarily. For every Terminator or M3gan, there’s an R2-D2 or Data (a crucial member of Starfleet!).

The optimistic view — one shared by Kurzweil — is that we’d work in tandem with machines to better ourselves and society.

Yet other experts worry:

- An open letter calling for a six-month pause on training AI systems more powerful than GPT-4 has garnered signatures from Apple co-founder Steve Wozniak, Elon Musk, and Getty Images CEO Craig Peters.

- AI scientist Geoffrey Hinton left Google so he could speak openly about the risks posed by the technology he helped pioneer.

- In 2021, all 193 UNESCO member states adopted its Recommendation on the Ethics of Artificial Intelligence, meant to help establish a global standard for regulation.

But all in all? Time will tell. (Eyes phone suspiciously.)