At 300 Funston Street in San Francisco’s Richmond District, there’s an old Christian Science church. Walk up its palatial steps, past Corinthian columns and urns, into the bowels of a vaulted sanctuary, and you’ll find a copy of the internet.

In a backroom where pastors once congregated stand rows of computer servers, flickering en masse with blue light, humming the hymnal of technological grace.

This is the home of the Internet Archive, a non-profit that has, for 22 years, been preserving our online history: Billions of web pages, tweets, news articles, videos, and memes.

It is not a task for the weary. The internet is an enormous, ethereal place in a constant state of rot. It houses 1.8B websites (644m of which are active) and doubles in size every 2-5 years. Yet the average web page lasts just 100 days, and most articles are forgotten 5 minutes after publication.

Without backup, these items are lost to time. But archiving it all comes with sizeable responsibilities: What do you choose to preserve? How do you preserve it? And ultimately, why does it all matter?

Alexandria 2.0

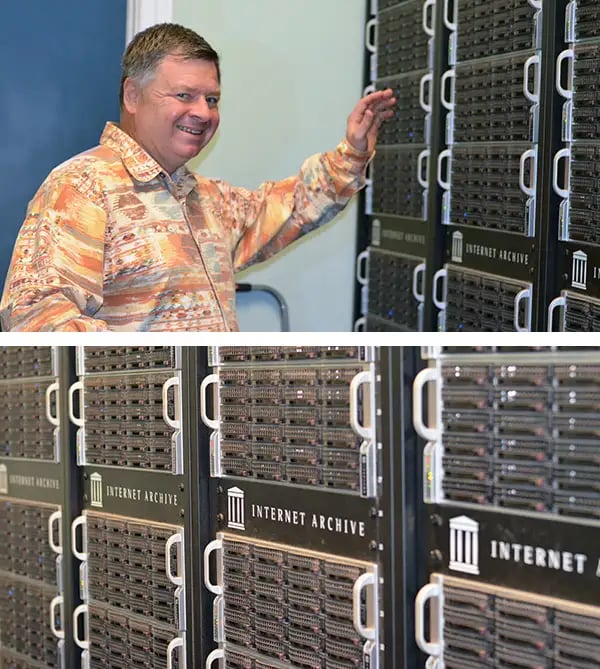

By the mid-’90s, Brewster Kahle had cemented himself as a successful entrepreneur.

After studying artificial intelligence at MIT, he launched a supercomputer company, bootstrapped the world’s first online publishing platform, WAIS (sold to AOL for $15m), and founded Alexa Internet, a company that “crawled” the web and compiled information (later sold to Amazon for $250m).

In 1996, he began using his software to “back up” the internet in his attic.

His project, dubbed the Internet Archive, sought to grant the public “universal access to all knowledge,” and to “one up” the Library of Alexandria, once the largest and most significant library in the ancient world.

Over 6 years, he privately archived more than 10B web pages — everything from GeoCities hubs to film reviews of Titanic. Then, in 2001, he debuted the Wayback Machine, a tool that allowed the public to sift through it all.

Wayyyyy back

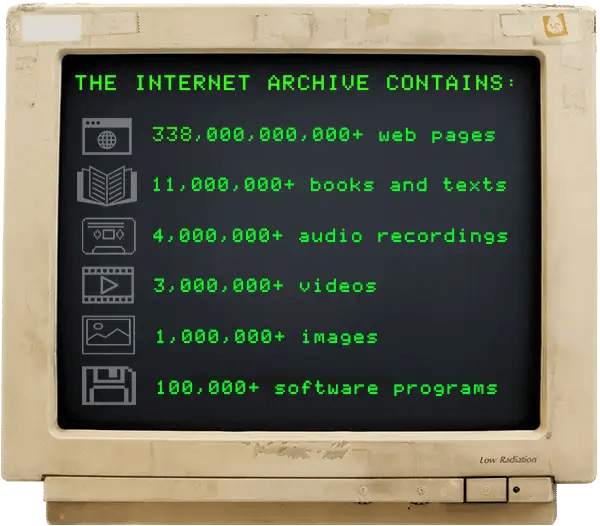

Today, the Wayback Machine houses some 388B web pages, and its parent, the Internet Archive, is the world’s largest library.

The Internet Archive’s collection, which spans not just the web but also books, audio, 78rpm records, videos, images, and software, amounts to more than 40 petabytes, or 40 million gigabytes, of data. The Wayback Machine makes up about 63% of that.

How much is this? Imagine 80 million 4-drawer filing cabinets full of paper. Or, slightly less than the entire written works of mankind (in all languages) from the beginning of recorded history to the present day.

By comparison, the US Library of Congress contains roughly 28 terabytes of text — less than 0.1% of the Internet Archive’s storage.

In any given week, the Internet Archive has 7k bots crawling the internet, making copies of millions of web pages. These copies, called “snapshots,” are saved at varying frequencies (sometimes, multiple times per day; other times, once every few months) and preserve a website at a specific moment in time.

Take, for instance, the news outlet CNN. You can enter the site’s URL (www.cnn.com) in the Wayback Machine, and view more than 207k snapshots going back 18 years. Click on the snapshot for June 21, 2000, and you’ll see exactly what the homepage looked like — including a story about President Bill Clinton, and a review of the new Palm Pilot.

Every week, 500m new pages are added to the archive, including 20m Wikipedia URLs, 20m tweets (and all URLs referenced in those tweets), 20m WordPress links, and well over 100m news articles.

Running this operation requires a tremendous pool of technical resources (software development, machines, bandwidth, hard drives, operational infrastructure) plus money, which the Archive pulls together from grants, donations, and its subscription archival service, Archive-It.

It also requires some deep thinking about epistemology, and the ethics of how we record history.

The politics of preservation

One of the biggest questions in archiving any medium is what the curator chooses to include.

The internet boasts a utopian vision of inclusivity: a wide range of viewpoints from a diverse set of voices. But curation often cuts this vision short. For instance, 80% of contributors to Wikipedia (the internet’s “encyclopedia of choice”) are men, and minorities are underrepresented.

Much like the world of traditional textbooks, this influences the information we consume.

“We back up a lot of the web, but not all of it,” Mark Graham, Director of the Wayback Machine, told me during a recent visit to the Internet Archive’s San Francisco office. “Trying to prioritize which of it we back up is an ongoing effort — both in terms of identifying what the internet is, and which parts of it are the most useful.”

The internet is simply too vast to capture in full: It grows at a rate of 70 terabytes (about 9 of the Internet Archive’s hard drives) per second. Its formats change constantly (Flash, for instance, is on its way out). A large portion of it, including email and the cloud, is also private. So the Wayback Machine must prioritize.

Though the Wayback Machine lets anyone archive individual URLs through the site’s “Save Page Now” feature, most of its archive comes from a platoon of bots, programmed by engineers to crawl specific sites.

“Some of these crawls run for months and involve billions of URLs,” says Graham. “Some run for 5 minutes.”

When the Wayback Machine runs a crawl, the human behind the bot must decide where it starts and how deep it goes. The team measures depth in “hops”: one hop archives a single URL plus every page it links to; two hops collects the URL, the pages it links to, and every page those pages link to; and so on.
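The hop limit behaves like a depth-limited breadth-first search. The sketch below is only an illustration under stated assumptions: the `crawl` function, the toy `LINKS` graph, and its example URLs are hypothetical, and a dictionary stands in for actually fetching pages and extracting their links. It is not the Internet Archive’s crawler.

```python
from collections import deque


def crawl(seed: str, hops: int, links: dict[str, list[str]]) -> list[str]:
    """Return URLs captured within `hops` link-steps of `seed`, breadth-first.

    Hypothetical sketch: `links` maps a URL to the URLs it links to,
    standing in for fetching each page and parsing its links.
    """
    captured = [seed]
    seen = {seed}
    frontier = deque([(seed, 0)])  # (url, distance from the seed in hops)
    while frontier:
        url, depth = frontier.popleft()
        if depth == hops:
            continue  # at the hop limit: keep this page, but don't follow its links
        for target in links.get(url, []):
            if target not in seen:  # skip pages already captured
                seen.add(target)
                captured.append(target)
                frontier.append((target, depth + 1))
    return captured


# A toy, hypothetical link graph; nothing is fetched from the network.
LINKS = {
    "a.example/": ["a.example/news", "b.example/"],
    "a.example/news": ["a.example/news/1", "c.example/"],
    "b.example/": ["b.example/about"],
    "c.example/": ["d.example/"],
}

print(crawl("a.example/", 1, LINKS))
# ['a.example/', 'a.example/news', 'b.example/']
print(crawl("a.example/", 2, LINKS))
# ['a.example/', 'a.example/news', 'b.example/', 'a.example/news/1', 'c.example/', 'b.example/about']
```

Each extra hop can multiply the number of pages captured, which is why the depth of a crawl has to be chosen deliberately.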

How exactly these sites are selected is “complicated.” Certain bots are dedicated solely to the 700 most highly trafficked sites (YouTube, Wikipedia, Reddit, Twitter, etc.); others are more specialized.

“The most interesting things from an archival perspective are all of the public pages of all of the governments in the world, NGOs in the world, and news organizations in the world,” says Graham. Getting access to these lists is difficult, but his team works with more than 600 “domain experts” and partners around the world who run their own crawls.

Archiving in the post-fact era

From its inception, the Wayback Machine has given website owners the ability to opt out of being archived by adding a “robots.txt” file to their sites. It has also granted written requests to remove websites from the archive.
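A crawler that honors this opt-out reads a site’s robots.txt before fetching its pages. As a rough sketch, Python’s standard-library `urllib.robotparser` can evaluate such a file. The robots.txt contents and the `allowed` helper are hypothetical, and `ia_archiver` is used only as an example of an archiving crawler’s user-agent name, an assumption rather than a statement of what the Wayback Machine checks today.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks the (example) archiving crawler "ia_archiver"
# from the whole site, while other crawlers are only kept out of /private/.
ROBOTS_TXT = """\
User-agent: ia_archiver
Disallow: /

User-agent: *
Disallow: /private/
"""


def allowed(agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Hypothetical helper: may `agent` fetch `url` under `robots_txt`?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly instead of fetching it
    return parser.can_fetch(agent, url)


print(allowed("ia_archiver", "https://example.com/2016/story.html"))  # False
print(allowed("ExampleBot", "https://example.com/2016/story.html"))   # True
print(allowed("ExampleBot", "https://example.com/private/notes.html"))  # False
```

Because robots.txt is a convention rather than an enforcement mechanism, whether a site stays out of an archive ultimately depends on the archive’s own policy.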

But this ethos has changed in recent years — and it’s indicative of a larger ideological shift in the site’s mission.

Shortly after Trump’s election in November of 2016, Brewster Kahle, the site’s founder, announced plans to create a copy of the archive in Canada, away from the US government’s grasp.

“On November 9th in America, we woke up to a new administration promising radical change,” he wrote. “It was a firm reminder that institutions like ours… need to design for change. For us, it means keeping our cultural materials safe, private and perpetually accessible.”

According to anonymous sources, the Wayback Machine has since become more selective about accepting omission requests.

In a “post-fact” era, where fake news is rampant and basic truths are openly and brazenly disputed, the Wayback Machine is working to preserve a verifiable, unedited record of history — without obstruction.

“If we allow those who control the present to control the past then they control the future,” Kahle told Recode. “Whole newspapers go away. Countries blink on and off. If we want to know what happened 10 years ago, 20 years ago, [the internet] is often the only record.”

At the Internet Archive’s sanctuary, the pews are lined with statues of long-time employees — techno-saints who’ve crusaded for free, open access to knowledge.

Above them, housed in a pair of gothic arches, 6 computer servers stand guard.

The $60k machines are made up of 10 computers apiece, with thirty-six 8-terabyte drives. Each piece of hardware contains a universe of treasures: 20-year-old blog posts, old TED talks, tomes forgotten by time.

When someone, somewhere in the world, reads a piece of information or looks at an archived webpage, a little blue light pings on the server.

Standing there, watching the galactic blips illuminate the racks, you can’t help but feel you’re seeing an apparition: The web is ephemeral. It rots, dies, and 404s. But it is alive even in death — and it will remain long after we’re gone.